A Lesson from Transformers: The key to success lies in team diversity!

Ali Mirzaei

September 11, 2024

The multi-head attention mechanism in transformers demonstrates the power of diversity in a team.

Keywords:

LLMs, transformers, multihead attention, diversity, teamwork

Introduction

A diverse team of skilled individuals can perform exceptionally well, much like the multi-head attention mechanism in Transformers. One of the standout features of applications like ChatGPT or Grok is their ability to analyse prompts from various perspectives, facilitating meaningful conversations. But how do they achieve this?

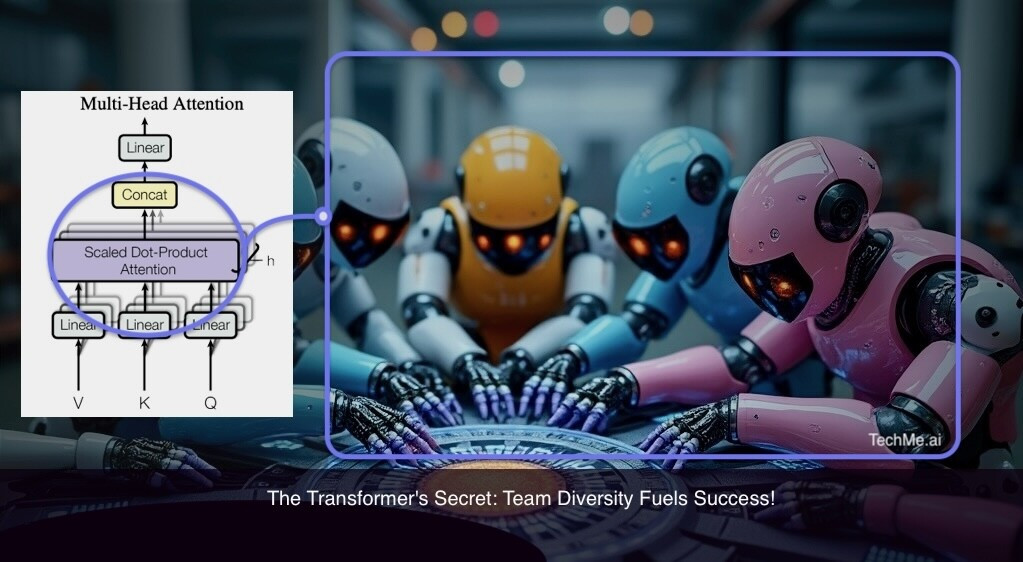

Multi-head attention mechanism: a diverse team in code

These chatbots and virtual assistants showcase the power of diversity through their architecture, mirroring the dynamics of an effective human team. They are built on Large Language Models (LLMs), whose architecture, known as the Transformer, uses a mechanism called attention to relate each word in a sequence to the others. However, a single trained attention mechanism may not capture every relevant relationship between words, akin to a team with just one member: limited, possibly biased, and prone to errors.

Here's where the brilliance of transformers comes into play. They run several attention mechanisms, called heads, in parallel. This is multi-head attention, and it works much like a team of several individuals. In this system:

- Multiple attention heads are initialised randomly and trained jointly on the same data, each with its own learned projection weights. Because every head starts from different weights, the heads tend to focus on different aspects of language, which broadens understanding and reduces the risk of oversight.

- Diversity among the heads mirrors a team whose members come from varied backgrounds and were trained in different environments. Each head, or team member, brings its own insights, and the outputs of all heads are concatenated and combined, allowing the collective to tackle complex problems from multiple angles.
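
To make the idea of heads working in parallel concrete, here is a minimal NumPy sketch of multi-head self-attention. It is an illustration under stated assumptions rather than the implementation used by any particular model: the function and variable names (`multi_head_attention`, `w_q`, `w_k`, `w_v`, `w_o`) and the toy sizes are hypothetical, the weights are random rather than trained, and masking, biases, and batching are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Scaled dot-product self-attention split across several heads."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    # Each head gets its own slice of the query, key, and value projections.
    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Every head computes its own attention pattern over the sequence.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)   # (num_heads, seq_len, seq_len)
    heads = weights @ v                  # (num_heads, seq_len, d_head)

    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

# Toy sizes and random (untrained) weights, chosen only for illustration.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

output, weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(output.shape)   # (4, 8)
print(weights.shape)  # (2, 4, 4)
```

Each slice `weights[h]` is one head's view of how the tokens relate to one another. Because the heads use different projection weights, their attention patterns differ, and the final output projection `w_o` is what combines those separate views into a single result.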

Conclusion

The Transformer's multi-head attention mechanism isn't just a technical innovation; it's a lesson in organisational dynamics. It exemplifies how diversity within teams—be it in AI or human organisations—leads to more robust, creative, and effective solutions. Just as multiple attention heads ensure that no stone is left unturned in language processing, a well-organised team with diverse skills and viewpoints can navigate the complexities of any challenge, leading to success through collective brilliance.